Photo by Hal Gatewood on Unsplash

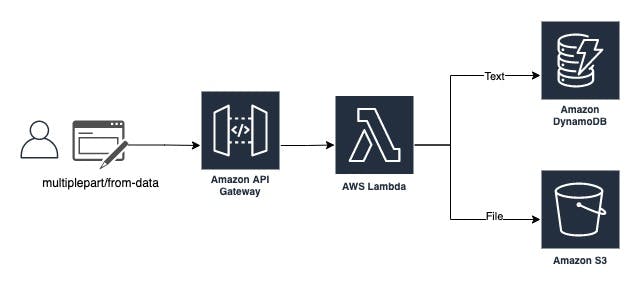

multipart/form-data: AWS API Gateway + Lambda

How to handle binary & string data types using Python

Introduction

This article covers the steps to accept binary data via multipart/form-data with AWS API Gateway (REST) & Lambda using Python.

A production deployment will also involve authentication, authorisation, and probably a WAF. This post, however, covers only the steps directly related to handling binary data.

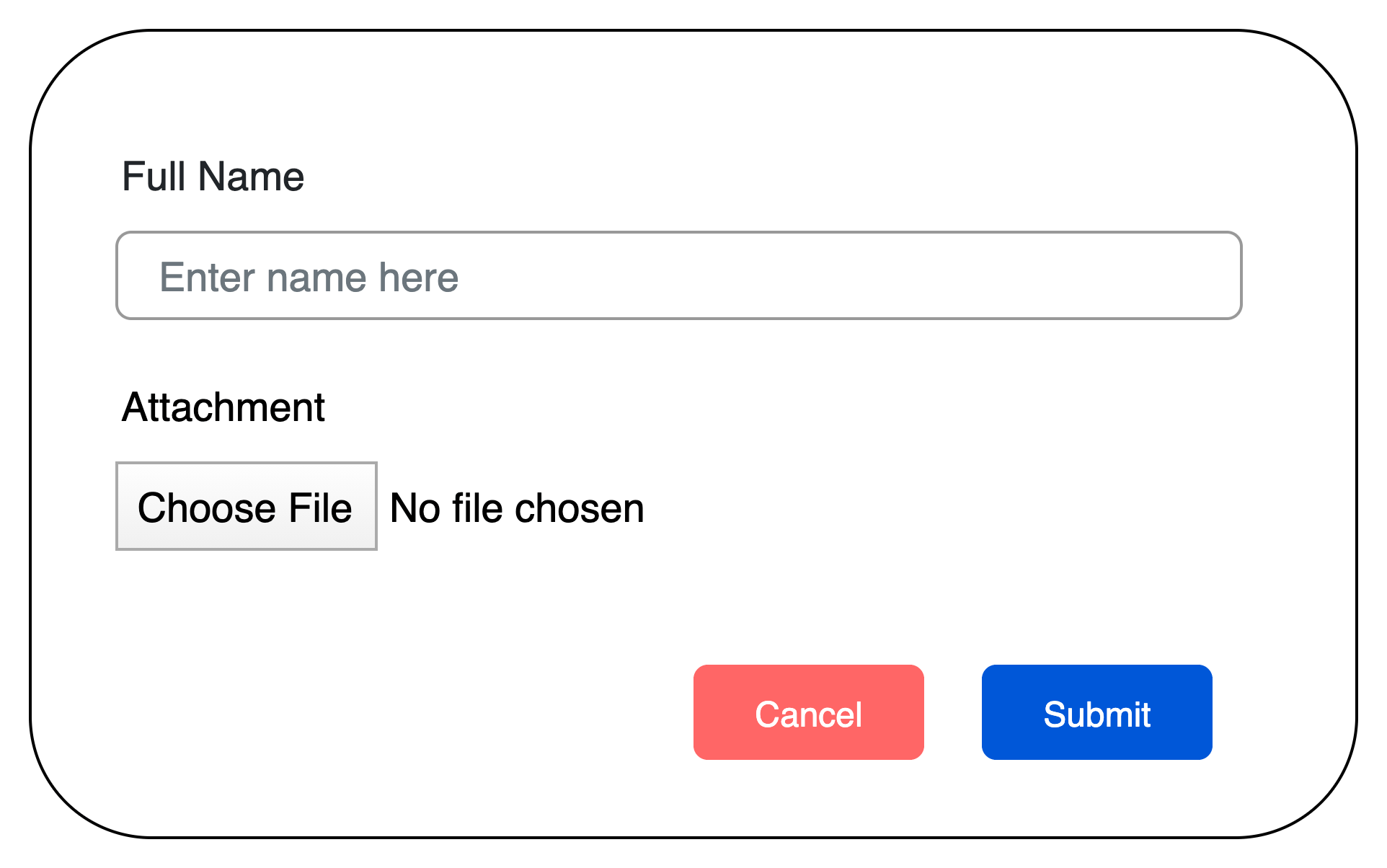

We will consider a simple form with a text field and file upload option in our example.

Lambda Setup

We will start with the Lambda setup and then link this Lambda to the API Gateway when we set the gateway up. The code below is for reference only; I have removed additional code to keep it simple and focused on handling multipart/form-data.

from streaming_form_data import StreamingFormDataParser
from streaming_form_data.targets import ValueTarget
import base64
import boto3

# Instantiate a table resource object for table name "app_data"
dynamodb = boto3.resource("dynamodb")
app_table = dynamodb.Table("app_data")

# Instantiate an S3 resource object
s3 = boto3.resource("s3")

def lambda_handler(event, context):
    # Initiate the parser with the request headers
    parser = StreamingFormDataParser(headers=event['params']['header'])

    # The form has 2 fields: 1x text & 1x file.
    # Here we create two ValueTarget objects to hold the values in memory.
    user_full_name = ValueTarget()
    uploaded_file = ValueTarget()

    # register() links each multipart/form-data key name
    # with the value targets created above.
    parser.register("name", user_full_name)
    parser.register("file", uploaded_file)

    # Decode the base64 event body passed by the API
    mydata = base64.b64decode(event["body"])

    # Parse the decoded body based on the registrations defined above.
    parser.data_received(mydata)

    # Convert the binary value to a UTF-8 string.
    # Optionally add the "USER#" tag (DynamoDB design requirement).
    data = "USER#" + user_full_name.value.decode("utf-8")

    # In S3 bucket 'mybucket', create a folder named after the value of
    # 'data' with 'file.p12' inside.
    filename = data + "/file.p12"
    s3_file = s3.Object('mybucket', filename)

    # Upload the file to the S3 bucket
    s3_file.put(Body=uploaded_file.value)

    response = app_table.put_item(
        Item={
            'p_id': data,
            # If the use case conforms with the DynamoDB item size limit of 400KB,
            # the line below allows saving the file in DynamoDB instead of S3.
            'attachment': uploaded_file.value
        }
    )

    return response

The Lambda uses the "streaming-form-data" pip package (imported as streaming_form_data). I used a Lambda Layer to include this package. Please refer to my other post on Lambda layers for details on using this feature.

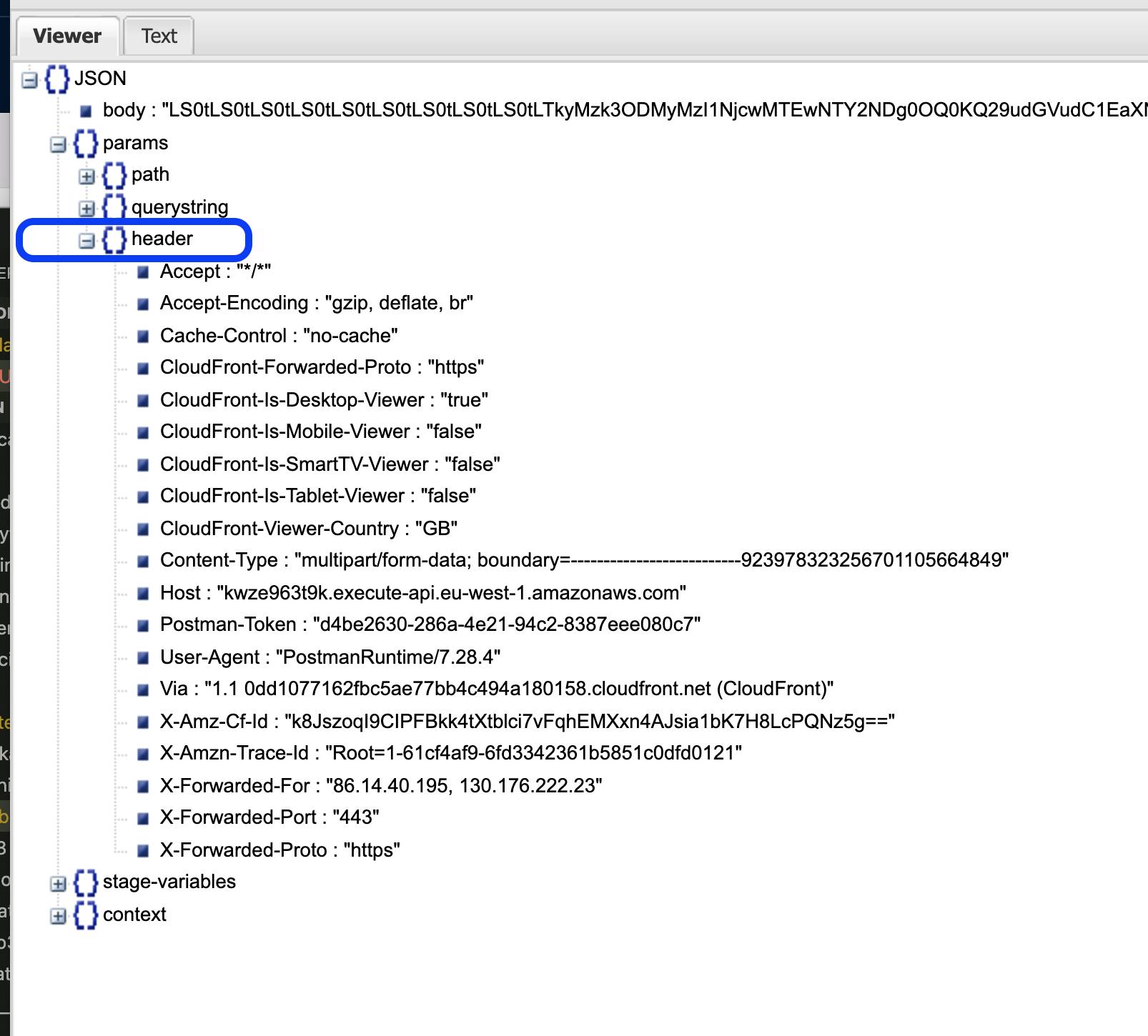

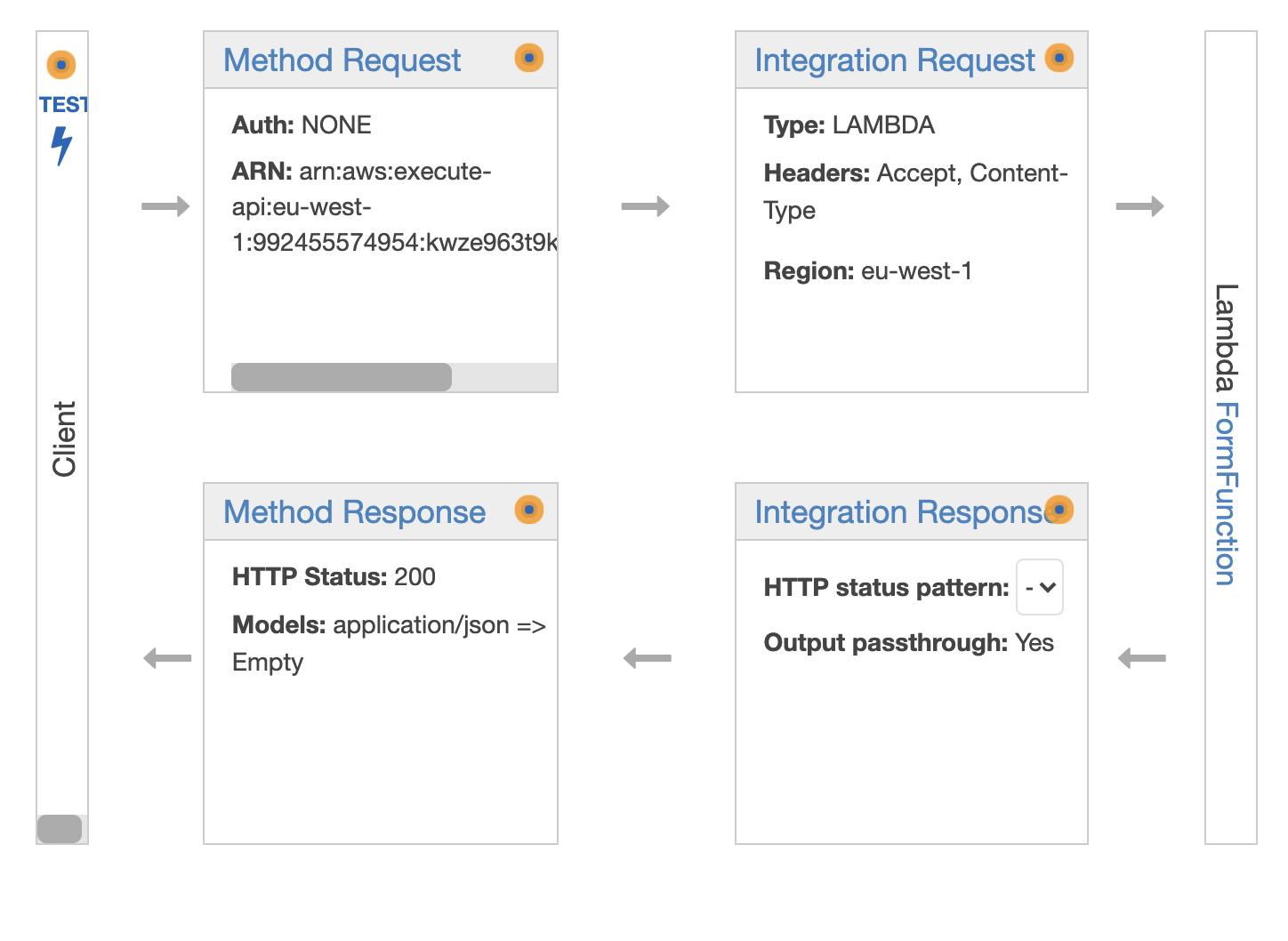

It's important to refer to the header section of your event correctly. This is done in the first line of the Lambda function:

parser = StreamingFormDataParser(headers=event['params']['header'])

If you are unsure, comment out all the other lines and print the whole event from Lambda. This lets you inspect the actual event dictionary in the CloudWatch logs.

As shown in the screenshot below, in my case the header section was located at event['params']['header'].

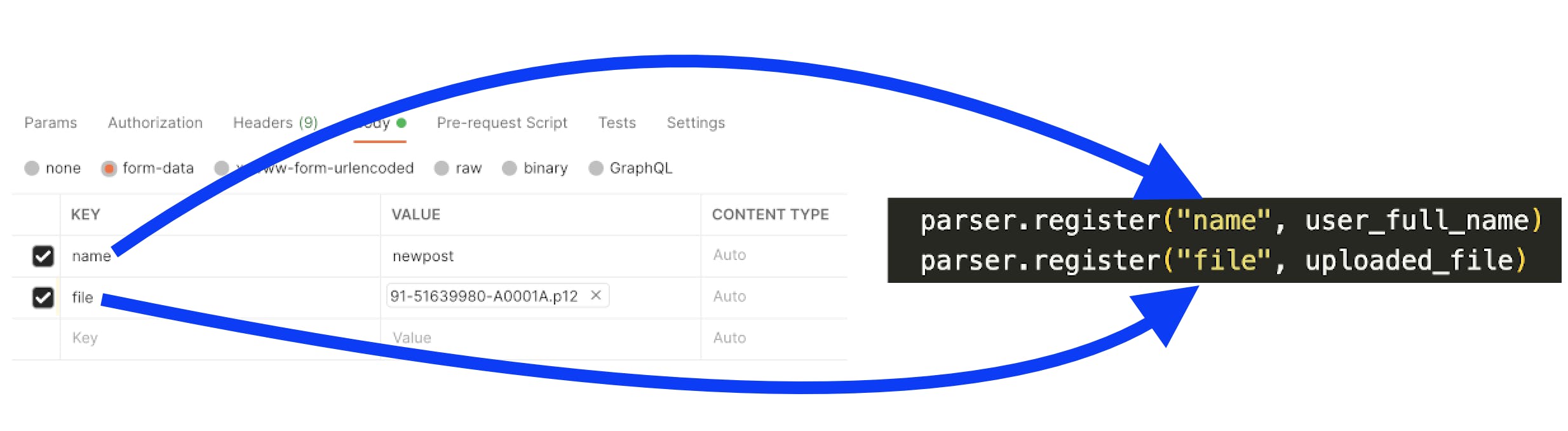
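As a minimal sketch of that debugging step, the handler below logs the whole event and then looks for the headers in two places. The helper name find_headers is hypothetical, and the second lookup (event["headers"]) is the shape used by Lambda proxy integration, which this article does not use; it is included only as an assumption about what you might see if your integration differs.

```python
import json


def find_headers(event):
    """Return the request headers dict from the event, or None if not found."""
    # Shape produced by the "Method Request passthrough" mapping template
    params = event.get("params")
    if isinstance(params, dict) and "header" in params:
        return params["header"]
    # Shape used by Lambda proxy integration (assumption: not this article's setup)
    if "headers" in event:
        return event["headers"]
    return None


def lambda_handler(event, context):
    # Print the full event so it appears in the CloudWatch logs
    print(json.dumps(event, default=str))
    headers = find_headers(event)
    # Return only the header names, which is enough to confirm the location
    return {"header_keys": sorted(headers) if headers else []}
```

Once the location is confirmed, the parser line in the real handler can point at the right key.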

The next set of data mapping is at the parser.register stage:

parser.register("name", user_full_name)

parser.register("file", uploaded_file)

The form field name (key) sent by the client must match the first argument of parser.register, and the second argument is the ValueTarget object that will receive the value.

After this, we follow the standard process to decode and parse the body. This adds the values to the ValueTarget objects we created earlier.

user_full_name.value and uploaded_file.value hold the raw bytes of the respective form fields. We decode the text field as UTF-8 and save the file as binary.
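To try the handler without deploying anything, it can help to build an event by hand. The sketch below uses only the standard library to assemble a multipart/form-data body with the two field names from this article ("name" and "file"), base64-encodes it, and wraps it in the event shape described above. The function names build_multipart and build_test_event are hypothetical, and the event contains only the two keys the handler reads; a real event from API Gateway carries more.

```python
import base64
import uuid


def build_multipart(fields, files, boundary=None):
    """Return (content_type, body_bytes) for a multipart/form-data request."""
    boundary = boundary or uuid.uuid4().hex
    lines = []
    # Plain text fields
    for name, value in fields.items():
        lines += [
            f"--{boundary}".encode(),
            f'Content-Disposition: form-data; name="{name}"'.encode(),
            b"",
            value.encode("utf-8"),
        ]
    # File fields: each value is a (filename, content_bytes) tuple
    for name, (filename, content) in files.items():
        lines += [
            f"--{boundary}".encode(),
            f'Content-Disposition: form-data; name="{name}"; filename="{filename}"'.encode(),
            b"Content-Type: application/octet-stream",
            b"",
            content,
        ]
    # Closing boundary, followed by a final CRLF
    lines += [f"--{boundary}--".encode(), b""]
    return f"multipart/form-data; boundary={boundary}", b"\r\n".join(lines)


def build_test_event(full_name, file_bytes):
    """Build a minimal event with a base64 body and the Content-Type header."""
    content_type, body = build_multipart(
        {"name": full_name}, {"file": ("file.p12", file_bytes)}
    )
    return {
        "body": base64.b64encode(body).decode("ascii"),
        "params": {"header": {"Content-Type": content_type}},
    }
```

With the streaming-form-data package installed and the boto3 calls stubbed out, an event from build_test_event("Jane Doe", b"...") could be passed to lambda_handler locally.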

The code also shows how to store the data in S3 or DynamoDB. Please make sure the role assigned to the Lambda function has the required read/write permissions for S3 and DynamoDB.
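The choice between S3 and DynamoDB can be made at runtime. The sketch below rebuilds the partition key and S3 key the same way the handler does, and adds a rough size check against the 400KB item limit mentioned in the code comments. The helper names are hypothetical, and the size estimate (attribute names plus values, in bytes) is only an approximation of how DynamoDB counts item size, so leave some headroom.

```python
DYNAMODB_ITEM_LIMIT = 400 * 1024  # bytes; approximate DynamoDB item size limit


def build_partition_key(name_bytes):
    """Decode the form's text field and add the "USER#" prefix."""
    return "USER#" + name_bytes.decode("utf-8")


def build_s3_key(partition_key, filename="file.p12"):
    """Place the file in a folder named after the partition key."""
    return f"{partition_key}/{filename}"


def fits_in_dynamodb(item):
    """Rough check: sum of attribute name and value sizes in bytes."""
    total = 0
    for key, value in item.items():
        total += len(key.encode("utf-8"))
        if isinstance(value, bytes):
            total += len(value)
        else:
            total += len(str(value).encode("utf-8"))
    return total <= DYNAMODB_ITEM_LIMIT
```

In the handler, a check such as fits_in_dynamodb({'p_id': data, 'attachment': uploaded_file.value}) could decide whether to include the attachment in the item or store it only in S3.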

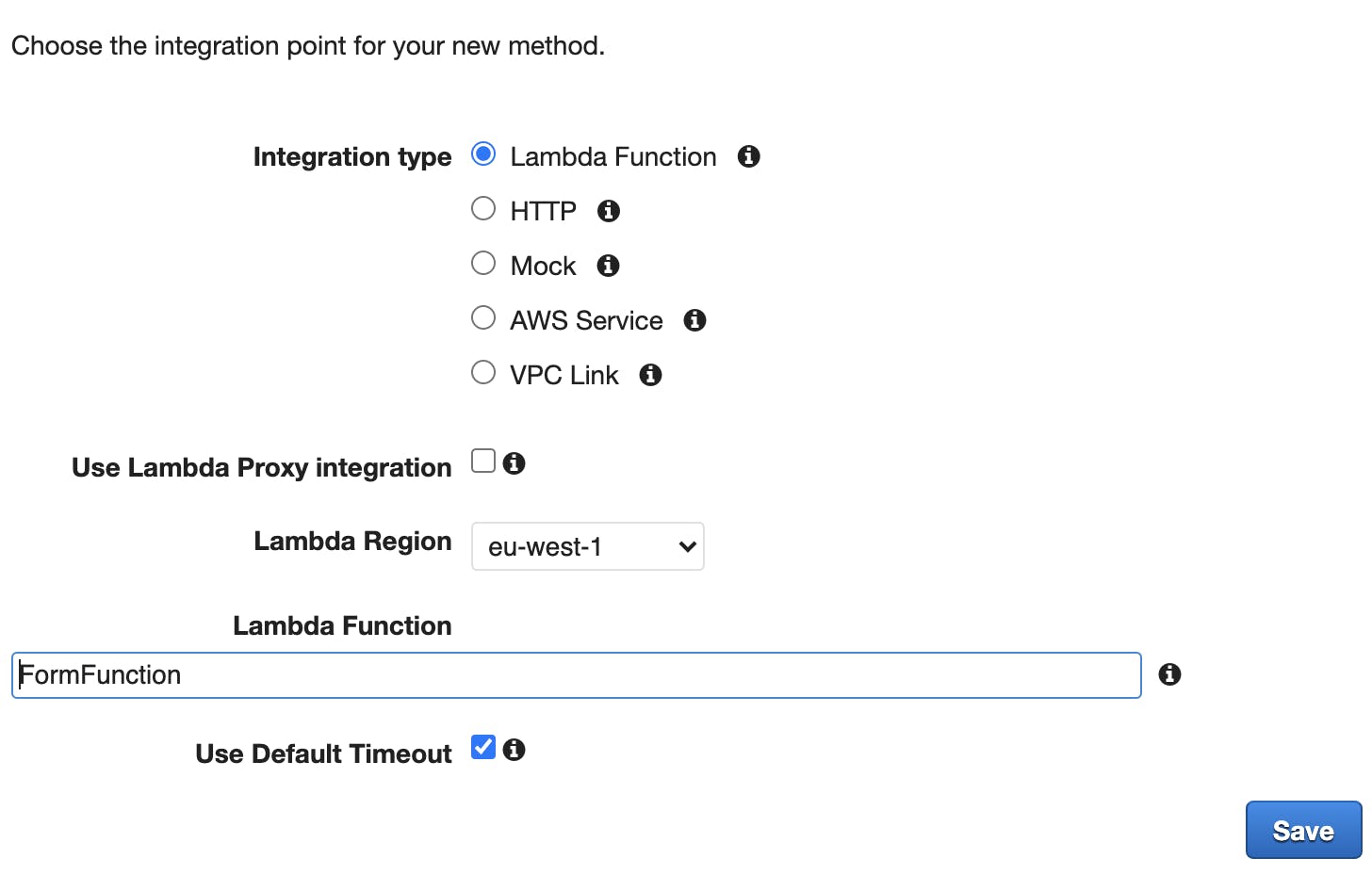

API Gateway Setup

We will create an API Gateway with the Lambda integration type.

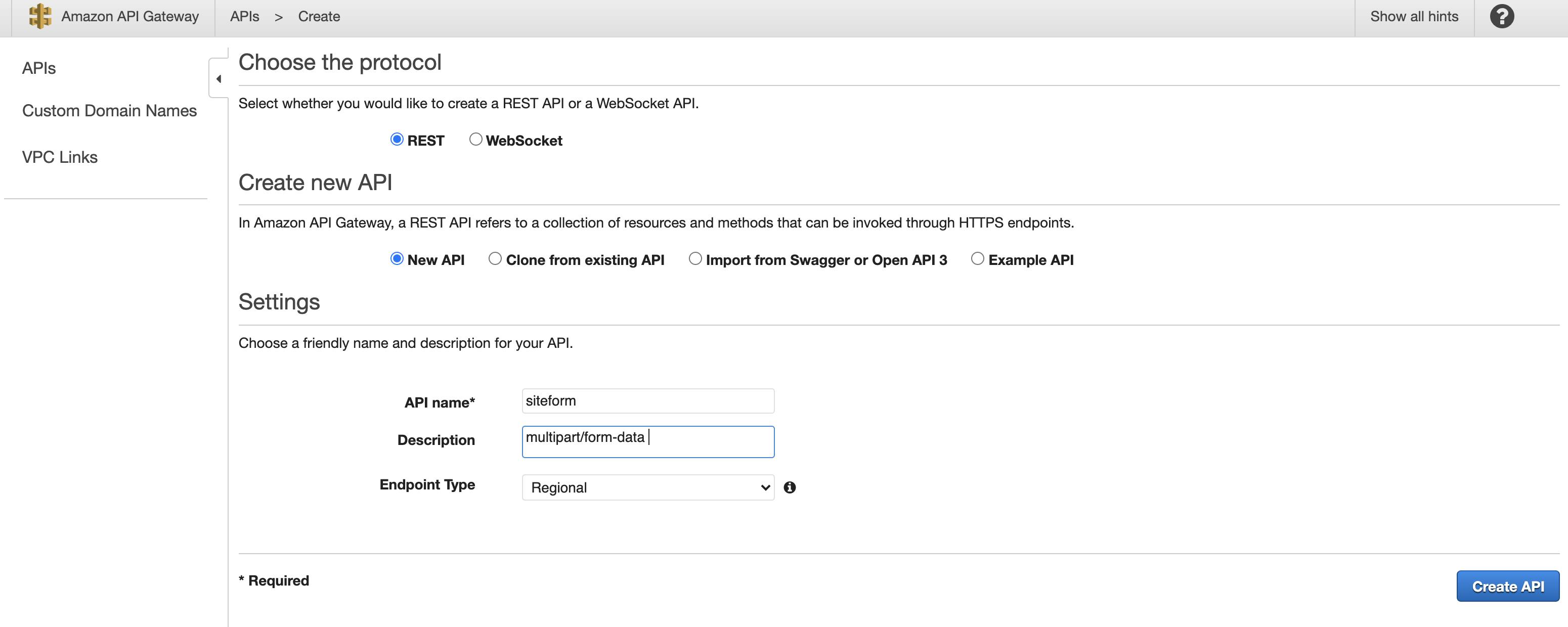

1) Create a regional REST API.

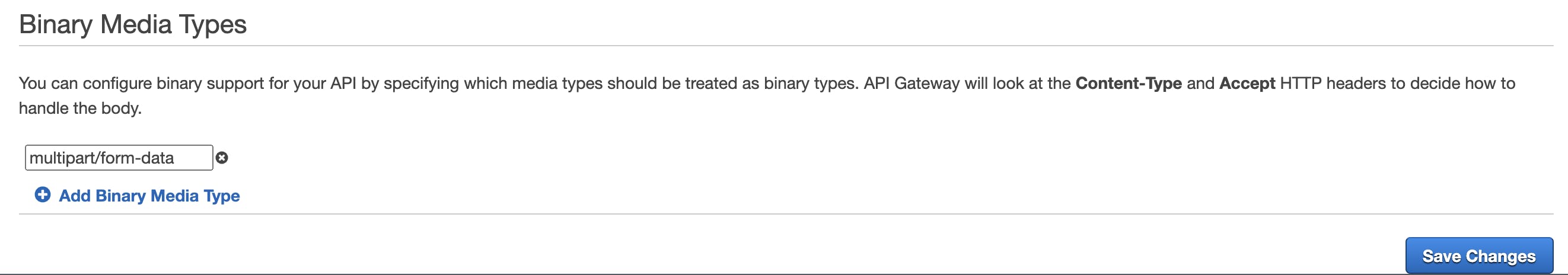

2) Under the "API Gateway" settings:

Add "multipart/form-data" under Binary Media Types.

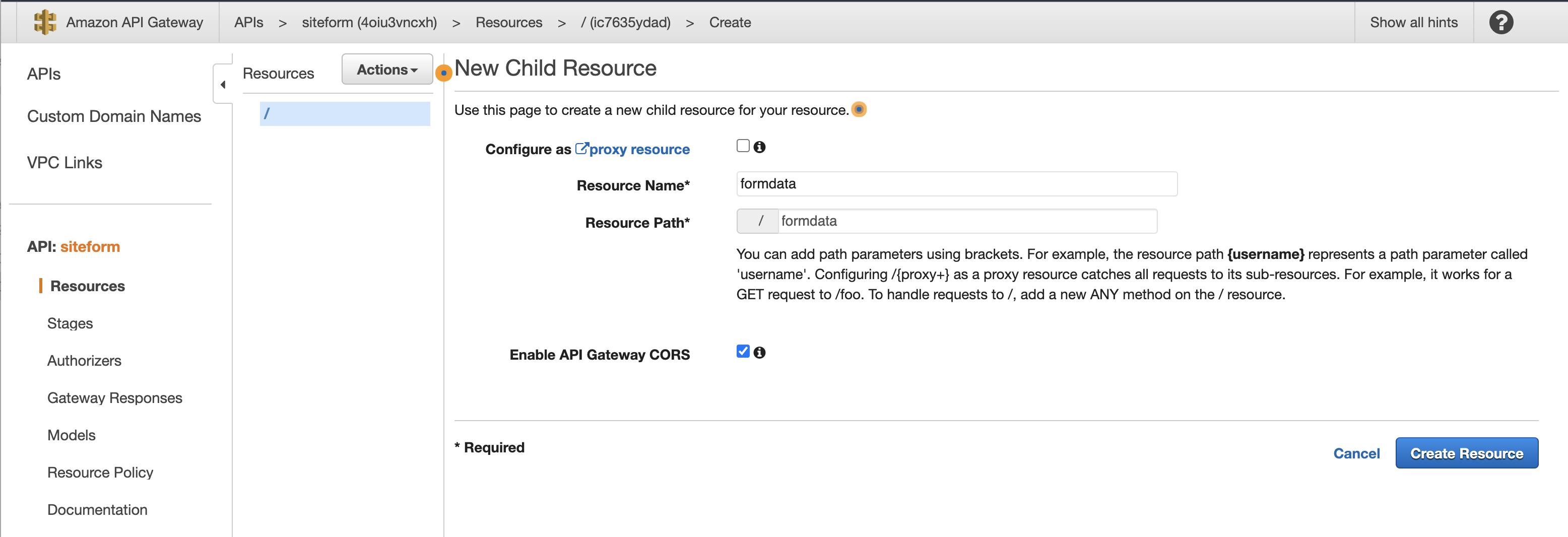

3) Add a "resource" and enable "CORS".

4) Create a "POST" method and select the Lambda we created earlier.

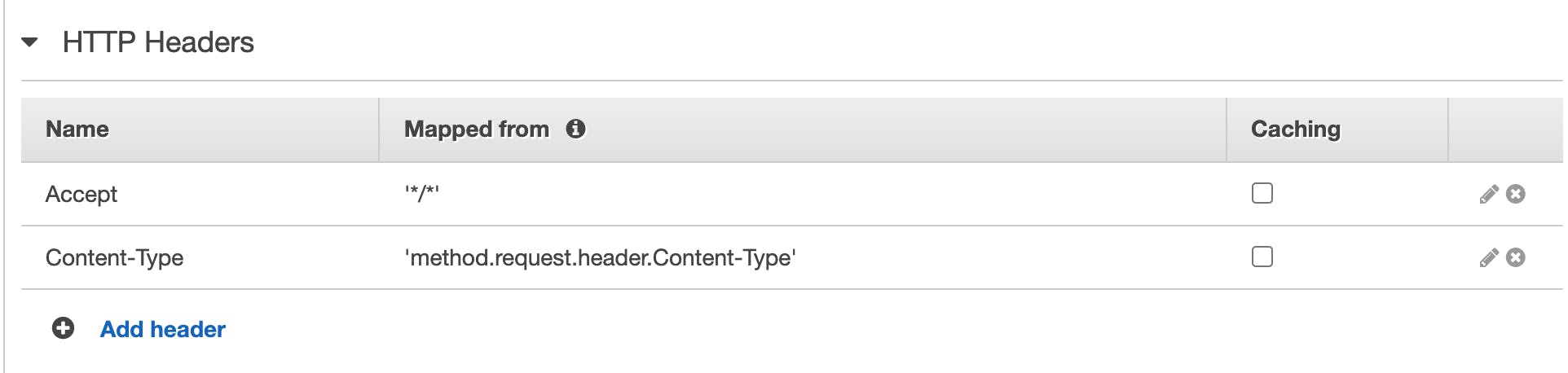

5) Click on the "Integration Request"

6) Under "HTTP Headers" add two entries as below:

Accept: '*/*'

Content-Type: 'method.request.header.Content-Type'

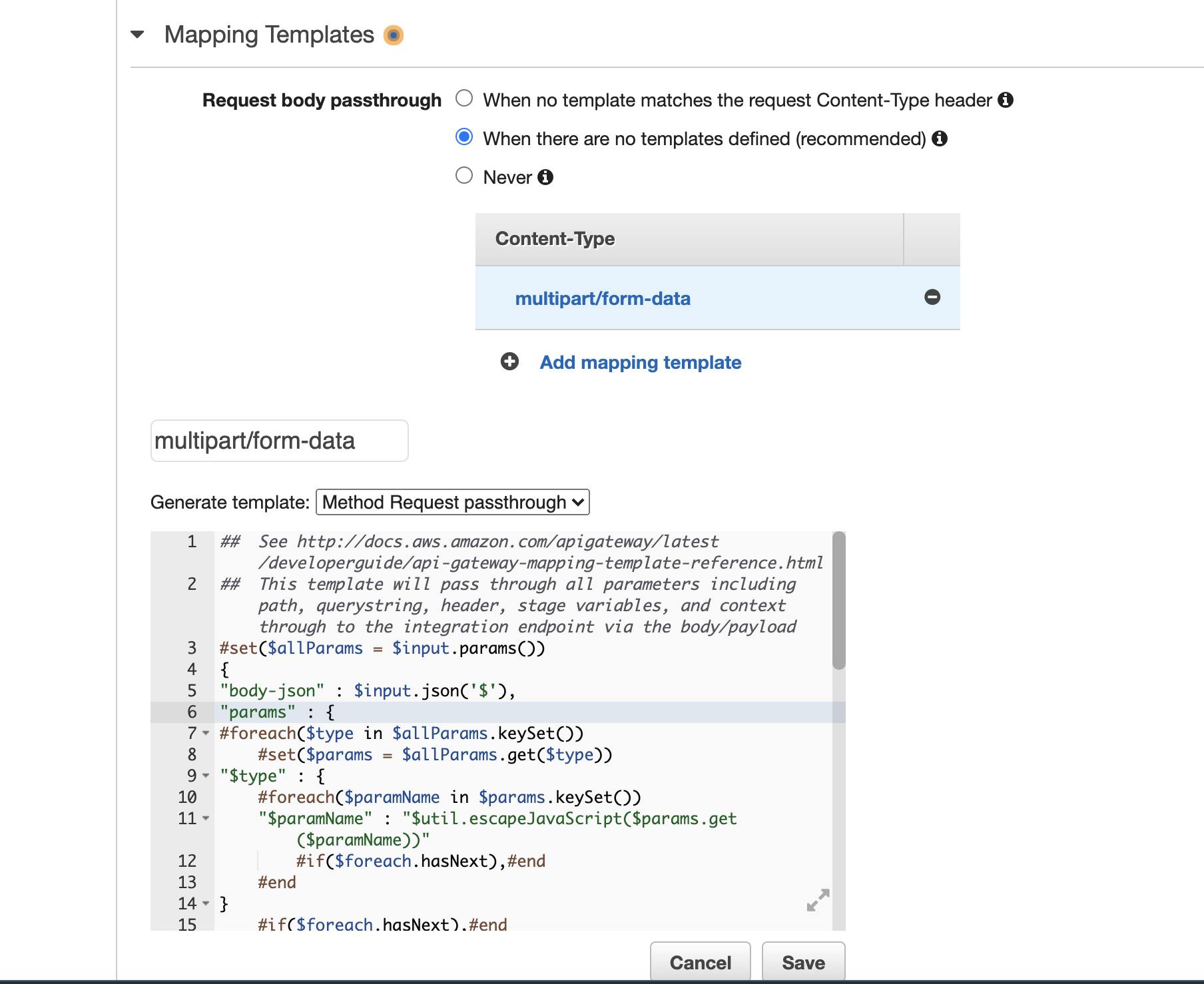

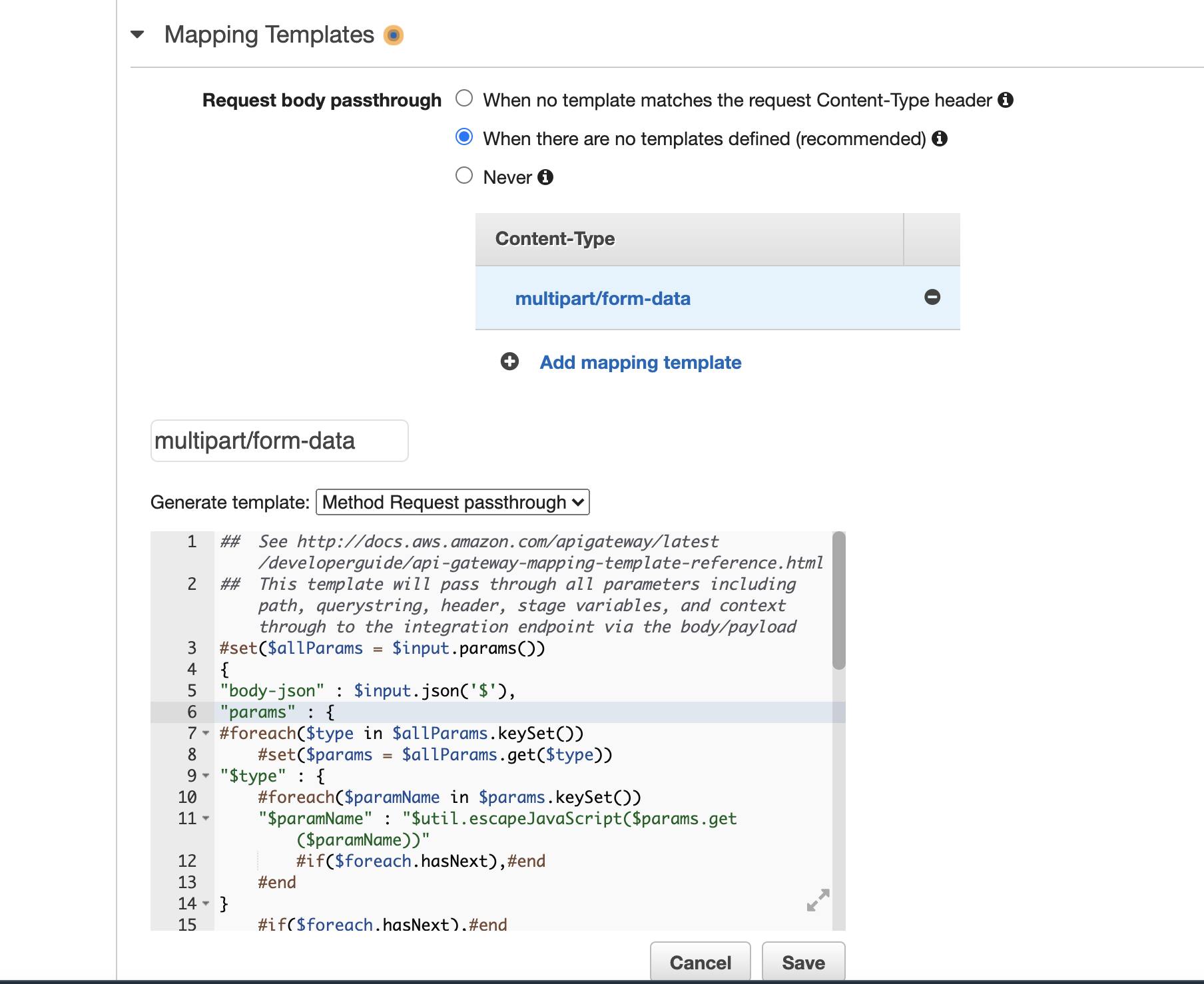

7) Under "Mapping Templates", select "When there are no templates defined (recommended)". Click on "Add mapping template" and add "multipart/form-data".

8) Select "multipart/form-data" and under "Generate template", select "Method Request passthrough". On line 5 of the generated template, I changed the key from "body-json" to "body", because the Lambda example reads event["body"].

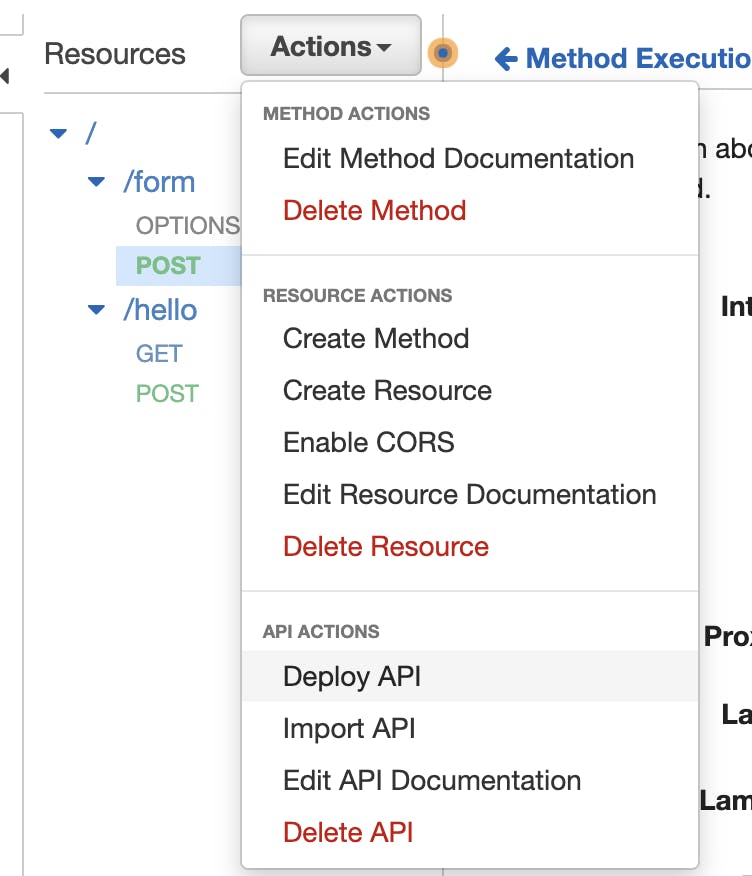

9) Save the changes and deploy the API Gateway by clicking on "Deploy API".
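The binary media type from step 2 can also be set from a script instead of the console. The sketch below is one possible way to do it with boto3's API Gateway client and a patch operation; the function names are hypothetical, and rest_api_id is a placeholder for your own API's ID. The "/" in the media type is escaped as "~1" in the patch path. The API still needs to be deployed afterwards for the change to take effect.

```python
def binary_media_type_patch(media_type):
    """Build the patch operation that adds one binary media type."""
    # Escape "~" and "/" for use inside the patch path (JSON Pointer style)
    escaped = media_type.replace("~", "~0").replace("/", "~1")
    return [{"op": "add", "path": f"/binaryMediaTypes/{escaped}"}]


def enable_binary_media_type(rest_api_id, media_type="multipart/form-data"):
    """Apply the patch to a REST API (requires boto3 and AWS credentials)."""
    import boto3  # imported here so the helper above works without boto3

    client = boto3.client("apigateway")
    return client.update_rest_api(
        restApiId=rest_api_id,
        patchOperations=binary_media_type_patch(media_type),
    )
```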

At this stage, if all the mappings are correct, Lambda should write the data to DynamoDB and S3. The examples are not production-ready and should be used as a reference for handling multipart/form-data in an AWS serverless architecture.

I hope you've found this article useful. Follow me if you are interested in:

- Python

- AWS Architecture & Security.

- AWS Serverless solutions.